Remarkable Handwriting with Vertex AI

April 19, 2024

Some Background: The Remarkable tablet

The Remarkable is an e-ink powered device that leans into writing as an experience. I often refer to it as the “infinity notebook”: in the UI you create notebooks which have pages, which you write on. Instead of a stack of notebooks I have my Remarkable.

Important to my purchase was the ability to get information out of the Remarkable. The Remarkable app, on desktops and iOS, lets you export Remarkable notebooks or individual pages to PDF, PNG, and SVG. In fact, that latter one enables easy migration of handwritten drawings to OmniGraffle.

So far, for the most part, the features of the Remarkable supplement that focus on writing. You can - and I do - enter text into the Remarkable via an onscreen or (proprietary) keyboard, and it can do handwriting recognition and conversion to text.

For my handwriting the conversion to text is OK, but not great. As such I rarely use it.

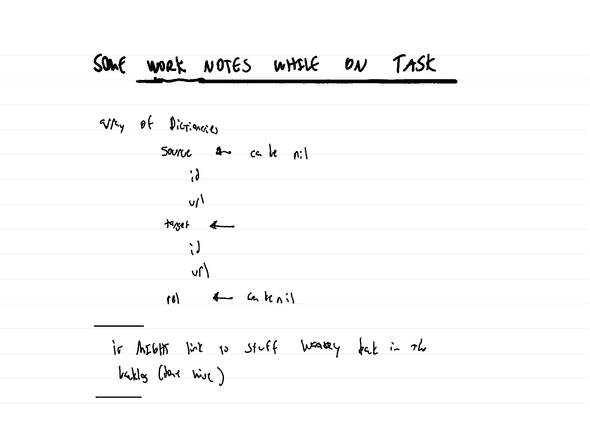

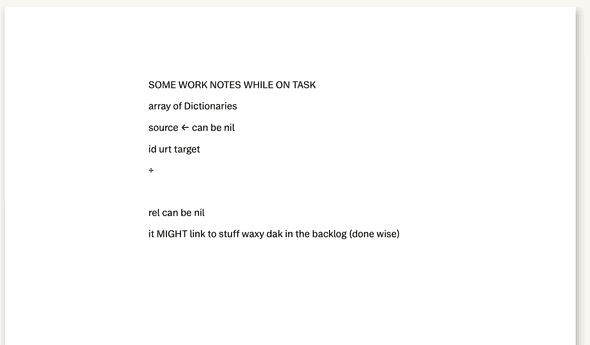

An example:

Remarkable’s handwriting recognition output:

Not great.

Using GenAI for better handwriting recognition

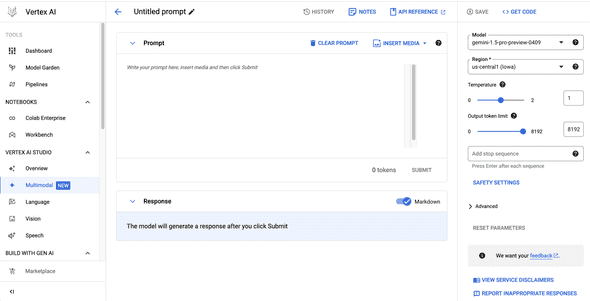

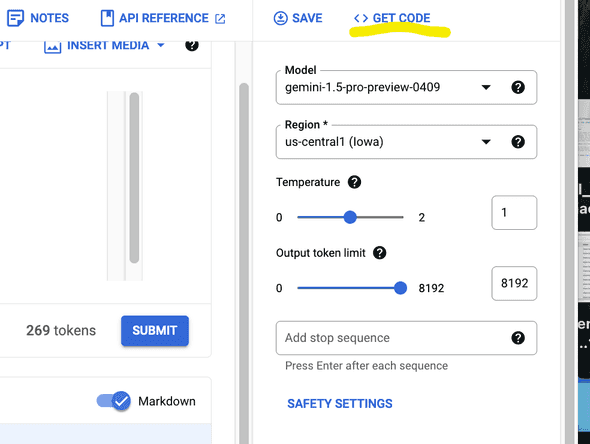

The other day I was exploring Vertex AI on Google Cloud.

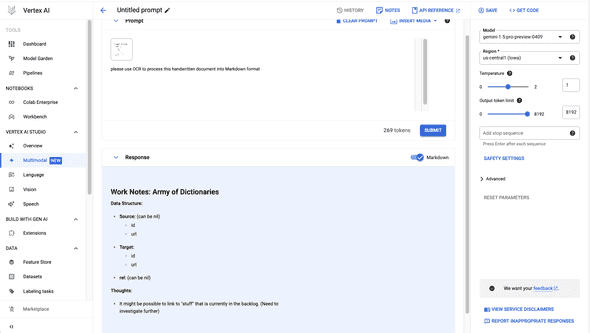

Huh, I can upload media. I tried it with my sample page.

If I adjust the temperature - which roughly controls how random, or "creative", the output is - I can (sometimes) get the AI to extrapolate from the information given. Most of the time I don’t want this, but it is a knob I could tweak.
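To make the temperature knob a little more concrete: in most language models, temperature divides the model's raw scores before they are turned into sampling probabilities. The sketch below is a toy illustration with made-up scores for three candidate readings of a word, not Vertex AI's actual implementation. The function name `softmax_with_temperature` and the example values are assumptions for illustration only.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by a temperature > 0."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    # Subtract the max before exponentiating for numerical stability.
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for three candidate readings of a scribbled word.
logits = [2.0, 1.0, 0.5]

cold = softmax_with_temperature(logits, 0.2)  # sharply favors the top reading
warm = softmax_with_temperature(logits, 1.0)  # spreads probability around
```

A low temperature concentrates almost all of the probability on the most likely reading, which is usually what you want for transcription. A higher one leaves more room for the less likely readings, and with them the extrapolation.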

Next Step

Then I asked myself: if Google can do this, can I write a shell script that runs this prompt with an arbitrary file, from my desktop?

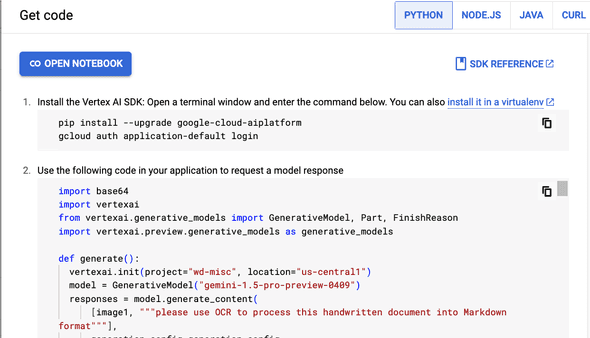

After some flailing around I noticed the Get Code button.

This reproduces your prompt in code, including which libraries you need to install.

It also includes any attachments, saved in your code as base64-encoded data.
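For anyone unfamiliar with base64: it is a way to represent arbitrary bytes as plain ASCII text, which is why an attachment can be pasted into source code as a string. Here is a minimal round trip using only Python's standard library. The sample bytes are a stand-in, not a real export.

```python
import base64

# Stand-in bytes for an exported page; a real script would read them from a file.
original = b"%PDF-1.4 pretend this is a Remarkable export"

# Encoding produces ASCII text that can be pasted into source code as a string.
encoded = base64.b64encode(original).decode("ascii")

# Decoding recovers the exact bytes that were encoded.
decoded = base64.b64decode(encoded)

print(encoded[:16], "...")
print(decoded == original)
```

Since a script that reads the file from disk already has the raw bytes, it can skip the encoding step entirely and hand those bytes over directly.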

Doing it yourself, the final script

With some minor modifications I got the following script:

    import sys
    import base64
    import vertexai
    from vertexai.generative_models import GenerativeModel, Part
    import vertexai.preview.generative_models as generative_models

    PROJECT = "FILL ME IN HERE"


    def multiturn_generate_content():
        # Connect to Vertex AI and send the document plus the prompt as one chat message.
        vertexai.init(project=PROJECT, location="us-central1")
        model = GenerativeModel(
            "gemini-1.5-pro-preview-0409",
        )
        chat = model.start_chat()
        result = chat.send_message(
            [document1_1, text1_1],
            generation_config=generation_config,
            safety_settings=safety_settings
        )
        print(result.candidates[0].content.parts[0].text)


    # The file to process is the first command line argument.
    file_path = sys.argv[1]

    document = None
    with open(file_path, "rb") as f:
        document = f.read()

    # Wrap the raw PDF bytes so they can be sent alongside the prompt.
    document1_1 = Part.from_data(
        mime_type="application/pdf",
        data=document)

    text1_1 = """please use OCR to process this handwritten document into Markdown format."""

    generation_config = {
        "max_output_tokens": 8192,
        "temperature": 1,
        "top_p": 0.95,
    }

    safety_settings = {
        generative_models.HarmCategory.HARM_CATEGORY_HATE_SPEECH: generative_models.HarmBlockThreshold.BLOCK_MEDIUM_AND_ABOVE,
        generative_models.HarmCategory.HARM_CATEGORY_DANGEROUS_CONTENT: generative_models.HarmBlockThreshold.BLOCK_MEDIUM_AND_ABOVE,
        generative_models.HarmCategory.HARM_CATEGORY_SEXUALLY_EXPLICIT: generative_models.HarmBlockThreshold.BLOCK_MEDIUM_AND_ABOVE,
        generative_models.HarmCategory.HARM_CATEGORY_HARASSMENT: generative_models.HarmBlockThreshold.BLOCK_MEDIUM_AND_ABOVE,
    }

    multiturn_generate_content()

Which works!
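The script hardcodes `application/pdf`, but the Remarkable app also exports PNG. One possible extension, sketched here as an assumption rather than something the script above does, is to pick the MIME type from the file extension using Python's standard `mimetypes` module. The helper name `guess_mime_type` and the choice of which types to allow are hypothetical.

```python
import mimetypes

# Assumption: only allow the PDF format used in this post, plus PNG exports.
SUPPORTED_MIME_TYPES = {"application/pdf", "image/png"}


def guess_mime_type(file_path):
    """Return the MIME type for a supported export, based on its extension."""
    mime_type, _encoding = mimetypes.guess_type(file_path)
    if mime_type not in SUPPORTED_MIME_TYPES:
        raise ValueError(f"unsupported file type: {file_path}")
    return mime_type
```

The result could then be passed as the `mime_type` argument to `Part.from_data` in place of the hardcoded string. Whether a given model accepts a given type is worth checking before relying on it.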

Conclusion

The Google Vision API offers a more direct way to work with known kinds of documents, or with my own data set if I provided one. For example, the Vision API had templates for bills, bank statements, and forms. If I had more specific needs (translating a handwritten form 100 times a day, for example) I might look into those APIs. For now, letting generative AI figure it out from a prompt and a given PDF file works great!