DuckDB and GToolkit

July 14, 2024

DuckDB Explorations: Approaching data persistence in exploratory situations

In software, I believe exploration and experimentation means a delicate balance of just enough infrastructure, easily changed decisions, simplicity until the last possible moment, and as small a complexity surface area from your tools as you can manage.

In my work using GToolkit as a software analysis platform I find myself with sections of data extracted from a larger data source. Quite often I’ll shove that subset of data into a Dictionary, I don’t need a class yet - as I haven’t discovered the behaviors or operations I want to perform on that data - but I do need to gather data.

If it’s a complex analysis - creating lots of structures from a bunch of sources - I may shove everything into a big Dictionary data soup - usually a Dictionary of a string key and a value being that defined structure dictionary. This gives me a handle around all of the data and lets me use functional methods like select and collect to aggregate the data into bigger chunks.

Smalltalk gives us a few ways to persist that data: exported as JSON, exported as STON, saved in the image itself, or database frameworks like GLORP. Most of the time - since this work is exploratory, lightweight, and unlikely to be used anywhere close to production, the storage mechanism can also be lightweight. Lately my favorite hack is persisting my data soup to STON files.

(I have, in the past, played with Tinkerpop and graph databases my store for my soup, but running a data store server all the time may be overkill).

Serializing Dictionaries as STON (SmallTalk Object Notation, a Smalltalk specific JSON-alike format) is certainly the lightest thing that could possibly work: It’s certainly KISS. Assuming - to steal some modern data store design terms - we think of the structures involved as documents, not relational data. While STON is better than JSON about handling structures and circular references I’ve recently had some problems with serializing very large Dictionaries. I may also want to relate objects.

So: sometimes I need a lightweight, easy to create and throw away, not a lot of ORM fanciness, relational data store.

Enter DuckDB

DuckDB is an “in-process analytical database” with no external dependencies, portable, with import functionality for JSON, CSV and Parquet. In addition to libraries for a bunch of languages there’s a CLI tool also.

(It’s not precisely “in-process”, as you can save your databases to files).

Let’s load some JSON up.

In earlier articles I’ve done analysis on the planets in Star Wars movies. I have a JSON file which is an array of dictionaries: one dictionary for each planet, each dictionary having the same fields. I’ve exported this data to results.json.

duckdb -json -c "INSTALL json; LOAD json;" -c "SELECT * from read_json('results.json');

Here we install the json plugin, and run a select statement on the JSON!!. (DuckDB derives the field names (and types) based on the shape of the JSON document.)

Louder for those in the back: This lets us use SQL on JSON data!!!

By default DuckDB outputs a little ASCII table on the CLI. We can output in JSON too, using the -json flag. (spoilers)

Let’s get a bit fancier

/opt/local/bin/duckdb -json -c "INSTALL json; LOAD json;" \

-c "CREATE TABLE planets AS SELECT * from read_json('results.json');" \

-c "SELECT name from planets"Here, instead of loading the JSON into a temporary table, we save it into a named table, then select all the planet names. We could load up a bunch of JSON files and do a join, perhaps.

DuckDB and GToolkit: an example

The primary way of interacting with GToolkit is through Lepiter, a notebook style interface that fixes text and code together. In fact, the code may be in different languages!

jsonFileLocation := '/Users/rwilcox/Temp/results.json'The interesting part about Lepiter notebooks is that variables and results can cross language boundaries! We can write a SQL statement and bind it to a variable in Smalltalk!

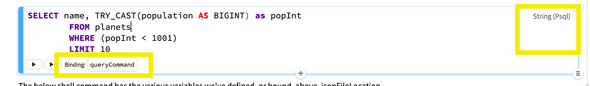

SELECT name, TRY_CAST(population AS BIGINT) as popInt

FROM planets

WHERE (popInt < 1001)

LIMIT 10How it shows up in a Lepiter page:

Some notes:

- See the Binding item (highlighted)? The string is accessible through that variable now.

- Notice the color coding? We’ve defined it also! (Also highlighted!) syntax highlighting won’t totally be correct, as PSQL <> SQLITE/DUCKDB SQL, but shrug_

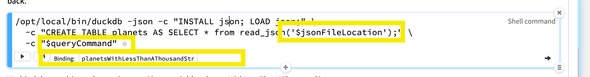

Now that we have the file location variable, and the SQL we want to run, we use the shell script snippet to run it, including those variables!!

The below shell command has the variables we’ve defined, or bound, above. jsonFileLocation and (the configured) queryCommand for the SQL. (Here’s that -json flag! We want machine parseable results back!).

/opt/local/bin/duckdb -json -c "INSTALL json; LOAD json;" \

-c "CREATE TABLE planets AS SELECT * from read_json('$jsonFileLocation');" \

-c "$queryCommand"Note we bind the resulting stdout string as a Pharo variable, planetsWithLessThanAThousandStr.

Now some Smalltalk code to turn it into an object:

NeoJSONReader fromString: planetsWithLessThanAThousandStr.

Which will return our results as an array of Dictionaries.

All this work is hard - let’s save our database

/opt/local/bin/duckdb -json -c "INSTALL json; LOAD json;" \

-c "CREATE TABLE planets AS SELECT * from read_json('$jsonFileLocation');" \

-c "EXPORT DATABASE '/Users/rwilcox/Temp/planets.db'"And Load it back

/opt/local/bin/duckdb -json -c "IMPORT DATABASE '/Users/rwilcox/Temp/planets.db'" \

-c "SELECT * FROM planets LIMIT 100"Conclusion

DuckDB is a great, standalone, cross-platform database store, and it’s even accessible from GToolkit if your data outgrows the simple things in life (simple serialization or just saving the data in the image itself!)