Artifacts and a look towards Software Logistics

October 21, 2024

The term “software artifact” is often seen as referring only to the runnable bits of a program: the steps to get from ideas to bits running on machines. However, as you move through the software development lifecycle, various (non-executable) artifacts are created, even in Agile environments!

To me, the important thing about any artifact is that it has a use, and that it helps the process along if created at the right time. This blog entry will enumerate the common artifacts in software development, their dependencies, and their uses.

Additionally, if we understand the dependencies and uses of these artifacts, we can avoid bottlenecks in our software development lifecycle, making teams more efficient at shipping software.

If we understand the artifacts, and their dependencies, we may be able to improve the logistics of a team: their software logistics.

Artifacts and information

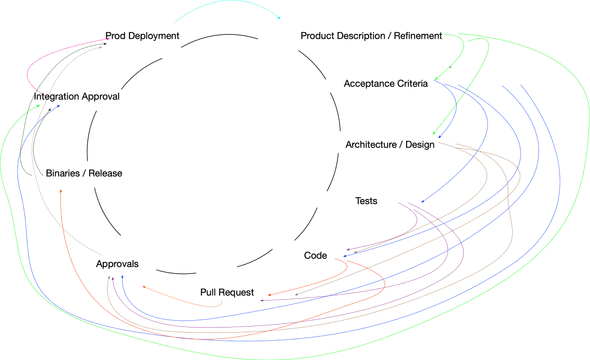

This diagram tries to map the flow, dependencies, and raison d’être of ARTIFACTS, not general development workflows.

While an individual ticket may be part of a larger planned layer cake, we are going to focus on a single ticket.

Let’s briefly enumerate the listed artifacts:

- Product Description / Refinement

- Acceptance criteria

- Architecture / (technical) design

- Tests

- Code

- Pull Request / code review phase

- Approvals

- Binaries / Release

- Integration Approval

- Prod Deployment

Product Description / Refinement

INPUTS: (previous) prod deployments

This could be called a User Story, a Product Requirements Document, or just a description in the body of the ticket. It is likely created by iterating on the current user interface or product offerings, but could also be backed up by user/product research or by support requests.

This may also result in a wireframe: the design of the UX. (I considered adding wireframes to the “Architecture / Design” artifact below, but wireframes often inform the acceptance criteria.)

In short: it’s what business and/or product want to change.

Acceptance Criteria

INPUTS: Product Description / Refinement

The acceptance criteria give all parties a shared definition of what “good” means for this ticket. Stereotypically this is what product needs from the work, but I believe it should also include items and inputs from all the stakeholders. For example, the team lead or SRE stakeholders may have acceptance criteria around responsiveness, monitoring, security, or architecture.

If we are writing a registration form, maybe we have a few acceptance criteria:

- Given the signup form, when the user enters 2 or more characters as a firm name then the form should be valid

- Given the signup form, a user who already has a login should not be able to sign up

… etc etc…
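To make the two criteria concrete, here is a minimal Java sketch of the rules they describe. The class `SignupFormValidator`, its methods, and the in-memory set of existing logins are hypothetical stand-ins for whatever your real form and user store look like.

```java
import java.util.Set;

// Hypothetical validator encoding the two example acceptance criteria.
class SignupFormValidator {
    private final Set<String> existingLogins; // stand-in for a real user store

    SignupFormValidator(Set<String> existingLogins) {
        this.existingLogins = existingLogins;
    }

    // Criterion 1: 2 or more characters as a firm name makes the form valid.
    boolean isFirmNameValid(String firmName) {
        return firmName != null && firmName.length() >= 2;
    }

    // Criterion 2: a user who already has a login may not sign up again.
    boolean canSignUp(String login) {
        return login != null && !existingLogins.contains(login);
    }
}
```

Note how each method's comment repeats its criterion: that traceability is the point here, more than the validation logic itself.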

Architecture / Design

INPUTS: Product Description, Acceptance Criteria

Once you understand the high-level work that needs to be done, the developers on the team need to agree on how to do that work. Sometimes this takes the form of a formal technical design document, but it may be notes taken during a meeting. It doesn’t have to be formal, although it can be: anything from 2-3 sentences about the technical problem and the solution, to a full RFC.

The work here is three-fold: drive consensus on your approach, document it for your future selves, and anchor effort estimation (if your team performs it).

Tests

INPUTS: Acceptance Criteria

I prefer tests that include an aspect of behavior-driven development. While some automated tests will be about technical implementation, ideally some tests should verify that the code implements the acceptance criteria. In fact, I prefer situations where the exact text of the acceptance criteria can be found easily when reviewing the code in the Pull Request!

I would argue that statements like the following are pretty readable by anyone on the team:

```java
import org.junit.jupiter.api.DisplayName;
import org.junit.jupiter.api.Test;

class SignupFormTest {
    @Test
    // JUnit 5: @DisplayName carries the human-readable criterion text
    @DisplayName("Given the signup form, when the user enters 2 or more characters as a firm name then the form should be valid")
    void testFirstNameCharacterLength() {
        // ...
    }
}
```

With a little bit of focus, the acceptance criterion (“Given the signup form, when the user enters 2 or more characters as a firm name then the form should be valid”) stands out, relatively easy to see among all the punctuation. I really like test frameworks that print all these statements out as part of test output.

Theoretically, you should be able to write at least test stubs this early, or at least understand how much change is needed to test this new work. Sometimes writing the tests completely first is impractical, but the tests may still drive the design of the code.
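If your test framework does not print the criteria text, the idea is easy to sketch by hand. The following is a minimal, framework-free Java sketch (not JUnit or any real BDD library): each check is keyed by the exact acceptance criterion text, a `null` check marks a stub written before the code exists, and the runner prints every criterion with its status. The names `CriteriaRunner`, `Status`, and `run` are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.BooleanSupplier;

// Hypothetical mini-runner: acceptance criterion text -> check.
class CriteriaRunner {
    enum Status { PASS, FAIL, PENDING }

    // Runs every check in insertion order and prints the criterion text with its status.
    static Map<String, Status> run(Map<String, BooleanSupplier> checks) {
        Map<String, Status> results = new LinkedHashMap<>();
        for (Map.Entry<String, BooleanSupplier> entry : checks.entrySet()) {
            BooleanSupplier check = entry.getValue();
            Status status;
            if (check == null) {
                status = Status.PENDING; // stub: criterion agreed, test not written yet
            } else {
                status = check.getAsBoolean() ? Status.PASS : Status.FAIL;
            }
            results.put(entry.getKey(), status);
            System.out.println(status + ": " + entry.getKey());
        }
        return results;
    }
}
```

Registering every criterion with a `null` check on day one gives a visible list of PENDING items that shrinks as the work lands. A `LinkedHashMap` is used because it keeps the criteria in the order they were written and, unlike `Map.of`, allows `null` values for stubs.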

For more manual testing scenarios, a test plan could be created here.

Code

INPUTS: Acceptance Criteria, Architecture / Design, Tests

Self-explanatory.

Pull Request

INPUTS: Product Description / Refinement, Architecture / Design, Tests, Code

To me, the pull request workflow popularized by GitHub conflates two things, mostly in good ways, in a handy interface. First, a code review allows peers to check the code for obvious bugs. Second, it acts as an incremental knowledge transfer session: this new thing works because we’ve made changes to these however-many files and methods.

In particular the body of the pull request should link to the Product Description / Refinement (usually “the ticket”), as well as the Architecture / Design the pull request implements. We want people looking at version control history to be able to backtrack to these artifacts in particular! (Also one reason why I prefer squash merges in git!)
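Linking can also be checked automatically. Below is a minimal Java sketch of a CI-style check that a pull request body links back to both the ticket and the design. The ticket key pattern (for example `SIGNUP-42`) and the `Design:` line convention are assumptions for illustration, not features of GitHub or any particular issue tracker.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical PR-body lint: does the description point back to its artifacts?
class PullRequestBodyCheck {
    // Assumed ticket key format, e.g. "SIGNUP-42".
    private static final Pattern TICKET = Pattern.compile("\\b[A-Z][A-Z0-9]+-\\d+\\b");
    // Assumed convention: a line starting with "Design:" followed by a link or reference.
    private static final Pattern DESIGN = Pattern.compile("(?m)^Design:[ \\t]*\\S+");

    // Returns human-readable problems; an empty list means the body passes.
    static List<String> problems(String body) {
        List<String> problems = new ArrayList<>();
        if (!TICKET.matcher(body).find()) {
            problems.add("No ticket reference (Product Description / Refinement) found");
        }
        if (!DESIGN.matcher(body).find()) {
            problems.add("No 'Design:' line (Architecture / Design) found");
        }
        return problems;
    }
}
```

With squash merges, a body that passes this check typically ends up in the commit message, so the links survive in version control history.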

If I’m playing the architect role, one of the things I look at during code review is “does it do what the developer said they were going to do, in about the way they thought they were going to do it?” Sometimes plans don’t work out, but sometimes requirements are just missed (or not figured out until one sees it in real code!). Usually this results in a productive conversation one way or the other!

If I’m playing a technical lead role, one of the things I’m looking for is tests that match the acceptance criteria, when possible. The more obviously called out the better, as I may not be able to quickly translate formFirstNameValidation into our acceptance criteria about 2 characters. (I can once I dig into the code, but it’s not obvious)
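A reviewer could get a little help here too. Here is a minimal Java sketch, assuming tests carry the criterion text verbatim (as in the test example in the Tests section): it reports any acceptance criterion whose exact text appears nowhere in the test sources. `CriteriaCoverage` and `missingCriteria` are hypothetical names, and exact string matching is a deliberate simplification.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical review aid: which acceptance criteria have no verbatim match in the tests?
class CriteriaCoverage {
    static List<String> missingCriteria(List<String> criteria, String testSources) {
        return criteria.stream()
                .filter(criterion -> !testSources.contains(criterion)) // exact text match only
                .collect(Collectors.toList());
    }
}
```

How you gather the test sources (the pull request diff, the whole test directory) depends on your tooling; a non-empty result is a prompt to ask a question in review, not an automatic rejection.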

Approvals

INPUTS: Product Description / Refinement, Acceptance Criteria, Architecture / Design, Tests, Code, Pull Request

Depending on your company’s Software Development Lifecycle (and regulatory environment), there may be lots of formal sign-offs here. Or maybe you just need one other person to approve the pull request with passing automated tests: that’s an approval too.

In a highly regulated environment, you may need to collect certain previous artifacts to proceed through higher-level environments. In a mostly manual setup, this means the Approvals artifact may become a bottleneck if not managed correctly. For example, imagine a workflow involving a tech lead sign-off, an architect sign-off, a product owner sign-off, and a QA sign-off: a relatively typical set of 4 sign-offs. (Throw in a security sign-off and a vulnerability management sign-off and you really have a party!) If managed poorly, this “approvals” artifact can turn into a fire drill of sudden requests for approval, with people feeling like they can’t reject something because of time pressure, or thinking “well, it’s done already, but I wish they would have talked to me sooner because yiiiiiikkeeeesss”. Or there are unexpected surprises when an expected approval turns into a last-minute rejection.

In an environment with manual sign-offs, this is where a good Release Engineer may be valuable, reducing surprises, bottlenecks, etc. In a more automated environment, many of these can be gathered and confirmed by a well-built CI/CD pipeline.

The artifact, in this case, is “we have approvals from everyone we need to get them from”.
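In the automated case, the Approvals artifact boils down to a set comparison. This minimal Java sketch uses the four sign-offs from the example above; the `Signoff` enum, `ApprovalGate`, and the explicit set of rejections are assumptions for illustration, not a description of any particular CI/CD product.

```java
import java.util.EnumSet;
import java.util.Set;

// Hypothetical approval gate using the four example sign-offs.
class ApprovalGate {
    enum Signoff { TECH_LEAD, ARCHITECT, PRODUCT_OWNER, QA }

    // Sign-offs still outstanding; an empty result means "we have approvals from everyone".
    static Set<Signoff> missing(Set<Signoff> required, Set<Signoff> approved) {
        Set<Signoff> outstanding = EnumSet.noneOf(Signoff.class);
        outstanding.addAll(required);
        outstanding.removeAll(approved);
        return outstanding;
    }

    // Any rejection blocks promotion, regardless of how many approvals were collected.
    static boolean canPromote(Set<Signoff> required, Set<Signoff> approved, Set<Signoff> rejected) {
        return rejected.isEmpty() && missing(required, approved).isEmpty();
    }
}
```

Running a check like this continuously, rather than only at the end, is one way to surface a missing or rejected sign-off before it becomes a fire drill.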

Binaries / Release

INPUTS: Code

This is however you package your application up: plain binaries, libraries, Docker containers, executables, whatever.

Integration Approval

INPUTS: Product Description / Refinement, Acceptance Criteria, Binaries / Release

This step presupposes that the binaries have been deployed to a real environment or two. Does it actually work in the running system, with everything else going on? Does it work technically? Does it accomplish the business or product objective? And sometimes: does the UX actually feel right? Better to learn this here than when publishing to the customer.

The result of that testing is the Integration Approval: the “Yes, ship it!”

Prod Deployment

INPUTS: Approvals, Binaries / Release, Pull Request, Integration Approval

The Binaries / Release is in the prod environment! There may be release notes to type up, or an announcement to make!
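Because each section lists its INPUTS, the artifacts form a dependency graph, and a topological sort gives one order in which they can be produced. The Java sketch below encodes the INPUTS listed above and applies Kahn's algorithm. The “(previous) prod deployments” input of Product Description / Refinement is left out, since it refers to an earlier iteration and would otherwise make the graph cyclic; the class, constant, and method names are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Artifact -> its INPUTS, as listed in each section above.
class ArtifactGraph {
    static final String PRODUCT = "Product Description / Refinement";
    static final String CRITERIA = "Acceptance Criteria";
    static final String DESIGN = "Architecture / Design";
    static final String TESTS = "Tests";
    static final String CODE = "Code";
    static final String PR = "Pull Request";
    static final String APPROVALS = "Approvals";
    static final String RELEASE = "Binaries / Release";
    static final String INTEGRATION = "Integration Approval";
    static final String PROD = "Prod Deployment";

    static Map<String, List<String>> inputs() {
        Map<String, List<String>> g = new LinkedHashMap<>();
        g.put(PRODUCT, List.of()); // "(previous) prod deployments" omitted: earlier iteration
        g.put(CRITERIA, List.of(PRODUCT));
        g.put(DESIGN, List.of(PRODUCT, CRITERIA));
        g.put(TESTS, List.of(CRITERIA));
        g.put(CODE, List.of(CRITERIA, DESIGN, TESTS));
        g.put(PR, List.of(PRODUCT, DESIGN, TESTS, CODE));
        g.put(APPROVALS, List.of(PRODUCT, CRITERIA, DESIGN, TESTS, CODE, PR));
        g.put(RELEASE, List.of(CODE));
        g.put(INTEGRATION, List.of(PRODUCT, CRITERIA, RELEASE));
        g.put(PROD, List.of(APPROVALS, RELEASE, PR, INTEGRATION));
        return g;
    }

    // Kahn's algorithm: repeatedly emit an artifact whose inputs have all been produced.
    // Assumes every input is itself listed as an artifact.
    static List<String> productionOrder(Map<String, List<String>> inputs) {
        Map<String, Integer> pending = new HashMap<>();        // unmet input count per artifact
        Map<String, List<String>> consumers = new HashMap<>(); // input -> artifacts that need it
        for (Map.Entry<String, List<String>> e : inputs.entrySet()) {
            pending.put(e.getKey(), e.getValue().size());
            for (String input : e.getValue()) {
                consumers.computeIfAbsent(input, k -> new ArrayList<>()).add(e.getKey());
            }
        }
        Deque<String> ready = new ArrayDeque<>();
        for (String artifact : inputs.keySet()) {
            if (pending.get(artifact) == 0) ready.add(artifact);
        }
        List<String> order = new ArrayList<>();
        while (!ready.isEmpty()) {
            String artifact = ready.poll();
            order.add(artifact);
            for (String consumer : consumers.getOrDefault(artifact, List.of())) {
                int left = pending.merge(consumer, -1, Integer::sum);
                if (left == 0) ready.add(consumer);
            }
        }
        if (order.size() != inputs.size()) {
            throw new IllegalStateException("Cycle among artifact dependencies");
        }
        return order;
    }
}
```

The result is only one valid sequence. Binaries / Release, for instance, needs only Code, so it can be produced in parallel with the review and approvals work rather than waiting behind it.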

Commentary

I’ve tried to make this list of artifacts as universal as possible. As such, there’s at least one artifact I’ve left out, probably more depending on your exact scenario. Notably absent, for Scrum organizations, is estimation: how complex an effort this item is. If you do care about estimation, and see it as an artifact, its inputs are Product Description / Refinement, Acceptance Criteria, Architecture / Design, and Tests.

To me, the ideal here is for the technical design and tests to be written (or sketched out) before any estimation happens: estimating an item with no idea how you’re going to do it feels like a recipe for failure to me!

At a larger technical program management level, the Integration Approval step may be blocked by a bunch of tickets getting their Approvals: you wrote a ticket for the backend work and the frontend work, for example. Cool, neat, as long as you’ve identified that process and made it transparent to stakeholders, sounds like a reasonable way of working.

Noticing and controlling the bottlenecks

If we understand the artifacts, and their ideal dependencies, we can attempt to move our organization towards a better state. Maybe this is sorting our dependencies a bit, maybe this is scaling specialized expertise. Maybe a campaign to examine the gestalt of the software development environment is in order: is there anything the organization can take from The Phoenix Project (my notes), Ask Your Developer (my notes) or Slack (my notes)?

Maybe change can be started simply, at the individual team level: watching tickets progress through the SDLC and get artifacts created and approved as early as possible.

Often less technical stakeholders believe the way to speed up the software development process is to speed up the coding part. In fact, that might not be where the bottlenecks really are.
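One way to find out where the bottlenecks really are is to measure how long tickets wait for each artifact. Here is a minimal Java sketch, assuming you can export, per ticket, the time each artifact was completed (for example from your issue tracker; the data shape here is hypothetical). It averages the time spent reaching each artifact from the previous one and reports the slowest step. `StageDurations`, `averageWait`, and `slowest` are illustrative names.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical bottleneck finder over per-ticket artifact completion times.
class StageDurations {
    // stages: artifact names in the order your process produces them.
    // tickets: for each ticket, artifact name -> time that artifact was completed.
    // Returns the average wait before each artifact, measured from the previous one.
    static Map<String, Duration> averageWait(List<String> stages, List<Map<String, Instant>> tickets) {
        Map<String, Duration> averages = new LinkedHashMap<>();
        for (int i = 1; i < stages.size(); i++) {
            String from = stages.get(i - 1);
            String to = stages.get(i);
            Duration total = Duration.ZERO;
            int count = 0;
            for (Map<String, Instant> ticket : tickets) {
                Instant start = ticket.get(from);
                Instant end = ticket.get(to);
                if (start != null && end != null) { // skip tickets missing either timestamp
                    total = total.plus(Duration.between(start, end));
                    count++;
                }
            }
            if (count > 0) {
                averages.put(to, total.dividedBy(count));
            }
        }
        return averages;
    }

    // The artifact with the longest average wait: a candidate bottleneck, or null if none.
    static String slowest(Map<String, Duration> averages) {
        return averages.entrySet().stream()
                .max(Map.Entry.comparingByValue())
                .map(Map.Entry::getKey)
                .orElse(null);
    }
}
```

This treats the stages as a simple sequence, which simplifies the real dependencies, and averages hide variance. Treat the result as a prompt for a conversation about where work waits, not a verdict on who is slow.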

Conclusion

Every company ships software a slightly different way, although I believe mostly with these same artifacts. A few of these artifacts may be more involved in certain areas, but I believe getting the movement of these artifacts correct is another thing that leads a company or project to the process / stabilization growth plane.

In fact, there’s another industry that cares very much about moving artifacts - goods - from point A to point B, while dealing with various dependencies: logistics.

Logistics is the part of supply chain management that deals with the efficient forward and reverse flow of goods, services, and related information from the point of origin to the point of consumption according to the needs of customers.

A careful look towards software logistics - making sure artifact dependencies happen easily, almost naturally, in the process of development - leads to increased visibility, predictability, implicit knowledge sharing, and project coordination.

I am almost certain there are additional lessons from logistics as an industry that could help software development at a team, or organization, level.